Formal Language

2024-04-26

2023-08-29

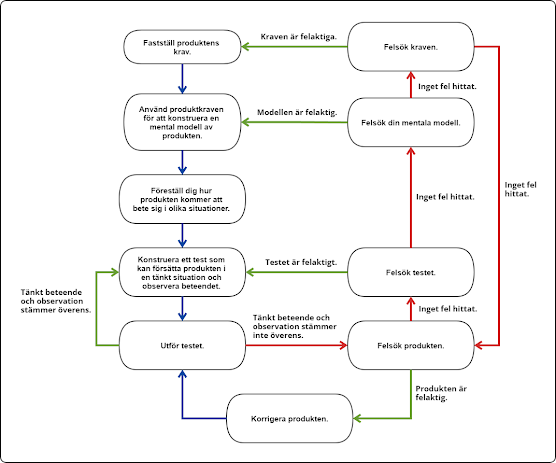

Error-Free Product Development

- The design does not work as intended because of unforeseen dependencies.

- A requirement is misinterpreted.

- The wrong version of the source documents is used in manufacturing.

2022-03-19

A Modular Architecture for Embedded Software

Organizing a software application into modules is a powerful way to manage complexity. Modules are reusable, development can take place in parallel and they can be tested separately.

In this architecture we design reusable modules that communicate via typed signals. It is not rocket science. It is the application of a few well known concepts that will make your code feel lighter, truly modular, straightforward to test and easier to change and reason about.

Basic C Modules

In the C tradition a module is a pair of files, one public header file and one private implementation file. The module is accessed through an API of public functions. Modules of this kind are very useful and we will use them as a foundation for the architecture presented below.

Signals

Embedded control systems process signals and events going in and out of the system. A module can be seen as a miniature system. We want signals to be passed to modules via function calls. This is important because it gives us full control of how to implement the functionality in the module. The input function is called when the signal changes. An event can be modeled as a signal with type void.

As an example we will use a module that receives a voltage signal as input. The C type is uint32_t and the engineering unit is mV. A scheduler event will drive the internal processing. Nothing prevents the input function from doing all the work, but for the system as a whole it is often better to delegate computation to the scheduler event function. That function is called periodically by the main scheduler or executed by a dedicated thread. This is similar to using a clocked flip-flop in a digital circuit to limit the logic depth of gates in sequence. In software we want to limit the call depth. The drawback is increased latency.

#include <stdint.h>

static uint32_t voltage_mV = 0;

static uint8_t voltage_changed = 0;

void voltage_sink_input(uint32_t value)

{

voltage_mV = value;

voltage_changed = 1;

}

void voltage_sink_scheduler_event(void)

{

if (voltage_changed)

{

voltage_changed = 0;

/* Do stuff */

}

}

Instances

The module above can only be used once in the system. To be able to reuse the module we need to introduce the concept of an instance. The instance is represented by a struct containing local data. It is passed to all input functions.

#include <stdint.h>

typedef struct {

uint32_t voltage_mV;

uint8_t voltage_changed;

} voltage_sink_instance_t;

void voltage_sink_input(void* inst, uint32_t value)

{

voltage_sink_instance_t* self = (voltage_sink_instance_t*)inst;

self->voltage_mV = value;

self->voltage_changed = 1;

}

void voltage_sink_scheduler_event(void* inst)

{

voltage_sink_instance_t* self = (voltage_sink_instance_t*)inst;

if (self->voltage_changed)

{

self->voltage_changed = 0;

/* Do stuff */

}

}

A macro can reduce the typing, but I will not use it here for clarity.

#define SELF(T) T* self = (T*)inst

It is also useful to have the start state of a module instance defined in a single place.

#define VOLTAGE_SINK_START_STATE { \

.voltage_mV = 0, \

.voltage_changed = 0 \

}
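As a minimal sketch of how the start state macro might be used: the struct and macro are repeated from above so the fragment compiles on its own, while sink_a and voltage_sink_reset are hypothetical names that I made up for the illustration.

```c
#include <stdint.h>

typedef struct {
    uint32_t voltage_mV;
    uint8_t voltage_changed;
} voltage_sink_instance_t;

#define VOLTAGE_SINK_START_STATE { \
    .voltage_mV = 0, \
    .voltage_changed = 0 \
}

/* Static initialization: the start state is spelled out only in the macro. */
static voltage_sink_instance_t sink_a = VOLTAGE_SINK_START_STATE;

/* Hypothetical helper: return a running instance to its start state. */
static void voltage_sink_reset(voltage_sink_instance_t* self)
{
    const voltage_sink_instance_t start = VOLTAGE_SINK_START_STATE;
    *self = start;
}
```

If a field is added to the struct later, only the macro needs to change and every instance picks up the new start value.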

Module Decoupling

The purpose of the void* inst in the call signatures is to decouple the modules from each other. A module that outputs a voltage signal should be able to send it to any module that accepts such a signal, not only the modules it knows about. To send a voltage signal to an instance, all we need is a pointer to the following tuple.

typedef struct {

void (*fn)(void* inst, uint32_t value);

void* inst;

} uint32_mV_input_t;

Outputs

Now we can build a voltage source that can send signals to the voltage sink. It has the ability to send the signal to a list of inputs.

typedef struct {

uint32_mV_input_t** output;

uint32_t next_value;

} voltage_source_instance_t;

void voltage_source_scheduler_event(void* inst)

{

voltage_source_instance_t* self = (voltage_source_instance_t*)inst;

uint32_mV_input_t** output = self->output;

while (*output != 0)

{

(*output)->fn((*output)->inst, self->next_value);

output++;

}

self->next_value++;

}

Interconnect

Now we can build a system where these two modules are connected.

voltage_sink_instance_t sink = {

.voltage_mV = 0,

.voltage_changed = 0

};

uint32_mV_input_t input = {

.fn = voltage_sink_input,

.inst = &sink

};

uint32_mV_input_t* inputs[] = {&input, 0};

voltage_source_instance_t source = {

.output = inputs,

.next_value = 0

};

int main(void)

{

while(1)

{

voltage_source_scheduler_event(&source);

voltage_sink_scheduler_event(&sink);

}

}

Request-Response

An output signal is not a request for another module to do something. It is only a statement about the current value of a signal in the system. If you want you can use signals to build request-response protocols. A module can be programmed to expect a response on input signal B soon after assigning a new value to output signal A. Connections made to other modules then need to match this expectation.
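Here is a rough sketch of what such a protocol could look like. All names (requester, responder, uint32_input_t, the doubling behavior) are assumptions made up for the example. The requester assigns output A and marks that it is waiting. The responder answers every new value on A with a value on B.

```c
#include <stdint.h>

/* Input tuple for a uint32 signal, same shape as uint32_mV_input_t above. */
typedef struct {
    void (*fn)(void* inst, uint32_t value);
    void* inst;
} uint32_input_t;

/* Requester: assigns output A and expects input B to follow. */
typedef struct {
    uint32_input_t* a_output;  /* where signal A is sent */
    uint8_t waiting;           /* 1 while a response on B is expected */
    uint32_t last_response;
} requester_instance_t;

void requester_b_input(void* inst, uint32_t value)
{
    requester_instance_t* self = (requester_instance_t*)inst;
    self->last_response = value;
    self->waiting = 0;
}

void requester_send_request(void* inst, uint32_t request)
{
    requester_instance_t* self = (requester_instance_t*)inst;
    /* Set the flag first: the response may arrive inside the call below. */
    self->waiting = 1;
    self->a_output->fn(self->a_output->inst, request);
}

/* Responder: answers every new value on A with a value on B. */
typedef struct {
    uint32_input_t* b_output;  /* where signal B is sent */
} responder_instance_t;

void responder_a_input(void* inst, uint32_t value)
{
    responder_instance_t* self = (responder_instance_t*)inst;
    /* Hypothetical behavior: respond with twice the requested value. */
    self->b_output->fn(self->b_output->inst, value * 2u);
}
```

Neither module knows about the other. The expectation that B follows A lives only in the requester and in the way the initialization code wires A to the responder and B back to the requester.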

Complex Signals

Signals can have composite values that are too large for a primitive C type. We can use a 32 byte buffer as an example. We need to pass a pointer to the buffer to the input function. Signals should behave like values, which means they should not be modified after transmission. This means we don't need to make copies of the signal for each downstream module. They can all refer to the same memory. When all modules have stopped using the signal it can be reclaimed by the source to represent a different signal. To know when this is ok we can use a reference counter.

typedef struct {

uint8_t buffer[32];

uint32_t refcount;

} uint8x32_sample_t;

typedef struct {

void (*fn)(void* inst, uint8x32_sample_t* value);

void* inst;

} uint8x32_sample_input_t;

The output module increases refcount for each receiving module and the receiving modules decrease it when they are done. The output module probably needs a pool of signals that gets reused over time.
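A pool like that could be sketched as follows. The pool size and the function names sample_pool_acquire, sample_retain and sample_release are assumptions for the illustration. The sketch does no locking, so it only works as written when source and receivers run in the same context.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t buffer[32];
    uint32_t refcount;
} uint8x32_sample_t;

#define SAMPLE_POOL_SIZE 4u  /* assumed pool size */

typedef struct {
    uint8x32_sample_t samples[SAMPLE_POOL_SIZE];
} sample_pool_t;

/* Source side: find a sample that no receiver is using any more. */
uint8x32_sample_t* sample_pool_acquire(sample_pool_t* pool)
{
    for (size_t i = 0; i < SAMPLE_POOL_SIZE; i++) {
        if (pool->samples[i].refcount == 0) {
            return &pool->samples[i];
        }
    }
    return NULL;  /* every sample is still in use downstream */
}

/* Source side: called once per receiving module before transmission. */
void sample_retain(uint8x32_sample_t* sample)
{
    sample->refcount++;
}

/* Receiver side: called when the module is done with the sample. */
void sample_release(uint8x32_sample_t* sample)
{
    if (sample->refcount > 0) {
        sample->refcount--;
    }
}
```

The source should fill the buffer and retain the sample for all receivers before it acquires the next one, otherwise the same free sample would be handed out twice.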

Hardware

An MCU has a lot of peripherals and these need to be mapped to input and output signals. For GPIO we can assign each pin an instance of the GPI or GPO module depending on whether it is an input or an output. To generate new values the GPI instances need to be polled with a call to an event input. This can be done by a poll event from the module that wants to know the pin state, or it can be done by a scheduler event. An interrupt can also be used as a poll event. The GPO module doesn't need a separate event. It can change the pin immediately in its input function. SPI, CAN, UART and ADC can in similar ways be mapped to input and output signals.

It can be tempting to skip the signal abstraction and access peripheral registers directly from any module that needs the data. Take system time for example. It can easily be read directly from the hardware. This is simple but makes it more difficult to test the module. A more test friendly way to distribute time is to add it to the scheduler event signal. Use uint32_us or uint32_ms and pass it to the module in the scheduler event. In a test case the time can be simulated to test edge cases that are difficult to test in real time.
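To make this concrete, here is a small sketch of a module that gets the time through its scheduler event. The watchdog module and all its names are hypothetical. It flags a timeout when its kick input has not been called for timeout_ms milliseconds.

```c
#include <stdint.h>

/* Hypothetical module: reports a timeout when no kick arrived for a while. */
typedef struct {
    uint32_t timeout_ms;
    uint32_t last_input_ms;
    uint32_t now_ms;      /* time from the latest scheduler event */
    uint8_t timed_out;
} watchdog_instance_t;

/* Event input (signal of type void): remembers when it was last called. */
void watchdog_kick_input(void* inst)
{
    watchdog_instance_t* self = (watchdog_instance_t*)inst;
    self->last_input_ms = self->now_ms;
    self->timed_out = 0;
}

/* The scheduler event carries the current time as a uint32_ms signal. */
void watchdog_scheduler_event(void* inst, uint32_t now_ms)
{
    watchdog_instance_t* self = (watchdog_instance_t*)inst;
    self->now_ms = now_ms;
    /* Unsigned subtraction stays correct when the counter wraps around. */
    if ((uint32_t)(now_ms - self->last_input_ms) >= self->timeout_ms) {
        self->timed_out = 1;
    }
}
```

A test case can now feed in times just before and after the 32 bit counter wraps, an edge case that would take about 49 days to reach with a real millisecond clock.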

Peripheral register access should be limited to a few modules dedicated to hardware interactions. All other modules should only use input functions to send and receive data.

Threads

If you use an RTOS you probably want each module instance to be run by its own thread. The input functions will put the signal in a queue which is monitored by the thread that runs an event loop or state machine to implement the behavior of the module.

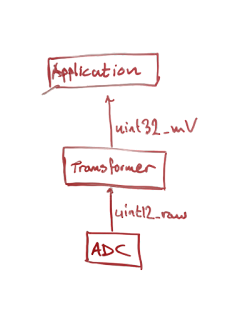
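The split between input function and thread could be sketched like this. A plain ring buffer stands in for the RTOS queue, since I don't want to assume a particular RTOS API. The names, the queue depth and the summing behavior are made up for the example. A real port would use the queue primitive of the RTOS, which also takes care of locking and blocking.

```c
#include <stdint.h>

#define QUEUE_LENGTH 8u  /* assumed depth, holds QUEUE_LENGTH - 1 items */

typedef struct {
    uint32_t items[QUEUE_LENGTH];
    uint32_t head;    /* next slot to write */
    uint32_t tail;    /* next slot to read */
    uint32_t sum_mV;  /* stand-in for the module's real behavior */
} queued_sink_instance_t;

/* Input function: runs in the sender's context and only enqueues. */
void queued_sink_input(void* inst, uint32_t value)
{
    queued_sink_instance_t* self = (queued_sink_instance_t*)inst;
    uint32_t next = (self->head + 1u) % QUEUE_LENGTH;
    if (next != self->tail) {  /* drop the signal if the queue is full */
        self->items[self->head] = value;
        self->head = next;
    }
}

/* One pass of the event loop: runs in the module's own thread. */
int queued_sink_process_one(queued_sink_instance_t* self)
{
    if (self->tail == self->head) {
        return 0;  /* queue empty */
    }
    uint32_t value = self->items[self->tail];
    self->tail = (self->tail + 1u) % QUEUE_LENGTH;
    self->sum_mV += value;
    return 1;
}
```

The thread would call queued_sink_process_one in a loop and sleep or block when it returns 0.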

Layers

It can be helpful to categorize the modules into a hardware layer and an application layer. In the application layer we want to use a few domain specific engineering units, for example float32_V, int32_us, uint16x32_mV, that fit the problem and are efficient to use on the hardware. The hardware layer on the other hand works with raw binary data, for example samples from an ADC. They need to be converted to engineering units when passed to the application layer. Let's say we have a 12 bit ADC with a range of 0-5V. We want to measure a signal that can be 0-50V. A resistor net reduces the signal 10 times before it hits the ADC. The conversion formula will be uint32_mV = uint12_raw * 50 * 1000 / 4095. This can be performed by a conversion module with a uint12_raw input and a uint32_mV output.
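The conversion formula could be written as the function below. The function and macro names are my own for this sketch. The multiplication is done before the division to keep the precision, and the largest intermediate value, 4095 * 50000 = 204750000, fits in 32 bits.

```c
#include <stdint.h>

#define ADC_MAX_RAW   4095u  /* 12 bit full scale */
#define ADC_RANGE_MV  5000u  /* 0-5 V at the ADC pin */
#define DIVIDER_RATIO 10u    /* the resistor net reduces the signal 10 times */

/* Convert a raw 12 bit ADC sample to the measured voltage in mV. */
uint32_t uint12_raw_to_uint32_mV(uint16_t raw)
{
    if (raw > ADC_MAX_RAW) {
        raw = ADC_MAX_RAW;  /* clamp values outside the 12 bit range */
    }
    return ((uint32_t)raw * ADC_RANGE_MV * DIVIDER_RATIO) / ADC_MAX_RAW;
}
```

A conversion module would call this in its uint12_raw input function and pass the result on to its uint32_mV outputs.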

Drawbacks

The big drawback when decoupling modules via signals is that you can't follow the program execution by reading the code in the output function. The input function to be called is hidden in a runtime variable. The static program partly becomes a virtual machine executing a dynamic graph of signal connections. You will need supporting documentation where modules, signals and connections are sketched out. This is also true for many techniques used in object oriented programming.

Summary

- Modules receive signals with public functions and transmit signals with function calls.

- Timer/Polling/Scheduler/Interrupt Events are signals of type void.

- An instance of a module is represented by a struct with local data that is passed to all input functions.

- Initialization code creates instances and connects outputs with inputs.

- Outputs depend on {(*fn)(), *inst} tuples, not specific input functions.

- The hardware layer consists of modules that map signals to MCU peripherals.

- All but the most primitive of modules are activated by scheduler events or by threads.

2022-01-22

Induction is Very Useful as a First Guess

Second edition: I was wrong. I have written about it before, but I forgot: induction doesn't exist. The number of possible theories for any set of observations is infinite. Brett Hall showed me the right path forward. We don't use induction to find knowledge. Instead we use a fundamental theory: There are regularities in the world. We use this theory to quickly draw conclusions from a few examples, also when the perceived regularity requires complicated transformations to be extracted from the experience. We hold on to the knowledge until we find a convincing counterexample, either through thinking or by observation.

Is it even possible to form a theory of an irregularity? Yes, I think so. We can use chaos theory and no-go theorems as examples. These theories can't be used to make predictions. It is more like a warning sign. Don't spend energy looking for regularities here, there are none.

First edition: Hume showed us that induction is not the mechanism we use to create knowledge but couldn't find an alternative explanation. Popper solved the problem using conjectures and refutations. We guess how the world works and our guesses can be falsified by things we already know and by cleverly constructed experiments when we lack convincing reasons to choose between competing theories.

But Popper does not tell us how we come up with conjectures. For this, induction is very useful. It lets us find patterns, regularities and causal relations that work surprisingly well as first guesses. If you believe that the sun will rise tomorrow because it has done so all days that came before, it will serve you well for billions of years. Only if you fail, with more time, to figure out how the solar system actually works - which will falsify your initial inductive guess - will it threaten your survival.

2021-10-03

Knowledge Creation and Induction

Here are my thoughts after listening to Bruce Nielson's excellent breakdown of the current state of artificial knowledge creation in The Theory of Anything podcast episode 26: Is Universal Darwinism the Sole Source of Knowledge Creation?

Hume explains why induction can't work. Induction is the belief that we can derive theories from observations. At least that is my understanding of the concept. This is the definition I will use as a starting point here as I dive into the subject of the podcast.

The only way to perceive reality is through observation. We also know that our theories work pretty well. So, where do they come from if not from observation? Popper says they are guesses. Conjectures. We just make shit up. That sure explains why people are so weird. We make advances in our understanding of reality by combining theories that give support to each other and to the observations we make. We definitely use observations. We have no other way to get access to reality. But they come second, after the theory is conjectured. The more support a theory needs for it to work, the better it is. Theories that don't rely on support from other theories or observations are bad because they are easy to vary.

Some critics of current AI algorithms say that they are based on induction, that they are inductive. This is a bit puzzling because induction doesn't exist and machine learning does. And it does create knowledge. Also, just because a process uses observations doesn't make it inductive, as we have seen above. I think the claim that an algorithm is inductive is just plain wrong. Induction is a failed philosophical approach without support in the physical world, where machine learning lives. An alternative formulation of the criticism could be that current AI researchers believe that induction works, therefore they will never be successful. We come to that conclusion because machine learning uses observations. So do Popper's theories, which leads me to believe we can all agree to drop that claim as well.

Another criticism is that machine learning doesn't create any new theories, no new explanatory knowledge. Therefore it cannot solve the problem of AGI, Artificial General Intelligence, which requires universal theory creation. Machine learning does create knowledge, but not of the explanatory kind. The theory involved in machine learning, e.g. how neurons work and their topology, comes from the programmer, not from the algorithm.

I'm not sure the critics will be right here either. I do not rule out that it is possible to find a topology that can excel at the task of universal theory creation. It would be yet another jump to universality to put under our belt. We don't appear to have found it yet though. Whatever theory we need to come up with to realize AGI, it will be created by a programmer. On its own, the fact that programmers create theories cannot be held against an approach that attempts to solve AGI. If it could, the task to create AGI would be proven impossible, and we have proofs of the opposite. Once created, the AGI algorithm will be able to rediscover itself, similar in kind to a metacircular interpreter that can interpret itself.

It doesn't seem to be very easy to recreate oneself though. Just look at our own struggles. Are we not universal explainers after all? Do we have a blind spot that will prevent us from achieving AGI? We have the theory of computation, the Turing machine and our ability to program it. Us having a blind spot appears to be ruled out. Theories that solve AGI just seem to be very rare and all we can do is keep guessing.

2020-12-07

- Every thing is a pattern

- But, but, but, physical things, like blueberries. They must not be just a pattern?

- They are. The key feature that lets us perceive a lump of matter as a blueberry is how its atoms are arranged, a pattern. The arrangement makes the lump good at reflecting blue light, among other things.

- The atoms then? They are real.

- They are also just patterns of even smaller things. Or a collection of measurements made by man made apparatus.

- The light?

- No, not even the light. Everything you can identify as an isolated thing is just a pattern, specific arrangements of smaller things or measurements. Extended in time or space. To be able to recognize a blueberry, its pattern needs to be interpreted by a computing device, i.e. it becomes software for the device, which transforms it into a different pattern. It could become an idea stored in your memory or a rearrangement of your muscular pattern, i.e. movement.

Physical things are patterns. Abstract things are patterns. Everything is a pattern.

The things we call physical are the patterns that exclusively occupy a part of physical reality. No other pattern that exists at the same abstraction level can claim the same space at the same time. Some of our senses like touch and vision have evolved to measure physical patterns.

All patterns are real - by that I mean that they exist somewhere in spacetime - also those that traditionally have been regarded as abstract. Thoughts are real. Numbers are real. Genes are real. They are patterns that can change other patterns, for example the patterns we categorise as physical.

Abstract patterns are substrate independent. If you try to understand them by investigating what the substrate is doing - the particles - the meaning is lost.

Abstract patterns of information are easy to create as long as we have a universal computer, e.g. our mind. When we try to create patterns in the physical world we encounter resistance. Most attempts don't work out the way we would like them to. The resistance is the laws of physics.

Evolution produces computation devices that take patterns as inputs and turn them into output patterns. Life is computation. Computations that can avoid being destroyed by reality stay around. New kinds of computation are created by mutation.

Memes are patterns that run on the reality simulator running on your neurons. They can create copies of themselves in other people's minds. To be able to do that they must survive in the simulated reality and they must create patterns in the physical reality that can be perceived by other people's senses. Memes evolve due to errors in this process. New memes can also arise from our creativity, i.e. ideas trapped in our minds that evolve into a pattern capable of escaping.

It could be that there are physical things out there in reality, but we can only ever understand and process patterns, because our mind is a computing device and patterns are the only thing it can know anything about.

P.S. In Objective Knowledge, Popper uses the concepts of first, second and third world to describe the physical, the personal and the memetic worlds. The laws of physics (first world) created the first replicators by deterministic application of forces. The replicators are then able to preserve the knowledge of replication through the feedback loop of replication. Mutations create new knowledge that hangs on to the stabilising attractor of replication. Neo-Darwinian evolution takes place in this constructed environment maintained by replicators (world 1.5?). The auxiliary knowledge gives rise to new computations that are not just replication. At first it only performs simple transformations from measurement (pattern recognition) to action (pattern creation). When these computations become more and more complex, a simulation of the first world starts to emerge in the mind of the organism (second world) - a virtualisation of physical reality. A new kind of knowledge preserved by replication across minds can arise. It takes the form of memes in the third world. This knowledge survives the death of any particular person. This is not true for knowledge instantiated in the mind of a single person that didn't become memetic. This drive we have to create memetic knowledge and our intuitive recognition of good memetic knowledge has emerged from genetic mutation. How can we recreate this complex computation and run it on a computer? Just any recreation will not do. We also want this artificial person to be part of our third world - to share our memes. D.S.

2020-11-28

Product Information Index - Pii

Track your product structure and dependencies in a fun way. Automate the tedious task of collecting and sorting the information generated during product development. Query the result to find unimplemented requirements, failed tests, recent changes or just browse around and impress yourself with what you have accomplished.

Pii helps us with the complex work of tracking artifacts and their relationships during product development. Pii integrates with existing infrastructure and processes that you already use for product development and documentation. Pii automatically tracks changes and their impact on related artifacts. An artifact is the abstract idea that represents a physical thing or a digital record that exists in different versions. Custom data formats are integrated with Pii using Python functions for parsing and categorising information.

Role-Relationship Model

Pii is based on a relational database with a browser frontend. Entities are represented by UUIDs and are associated with other entities and values through relations. Entities are assigned roles (types) dynamically. Roles decide which relations the entity can join. This is unlike traditional entity-relationship modeling where entities are modelled as relations. In the relational model of Pii, an entity is just a UUID taking on different roles to participate in relations. Let's call it an RR-Model.

Tracking Changes

Tracking changes in the contents of a file is a basic capability of Pii. A single line in tracker.py adds a file to Pii.

a = trackFile("path/to/filename", "Content-Type")

Initially, one MutableE entity representing the file and one ConstantE entity that represents the contents of the file are added to Pii. MutableE and ConstantE are roles. They are associated through the relation ContentEE. The MutableE entity is also assigned the role FileE with the additional relation PathES.

a = UUID()

a -- EntityE

a -- IdentityES -- "filename"

a -- MutableE

a -- FileE

a -- PathES -- "path/to/filename"

b = UUID()

b -- EntityE

b -- IdentityES -- "filename 2020-10-14T19:37:10.121"

b -- ConstantE

b -- ContentTypeES -- "Content-Type"

b -- ContentEB -- <11010...>

b -- ShaES -- "E0EE5BC391BB02D9891139EBBA3C674CFA1CA712"

a -- ContentEE -- b

When a change to the file content is detected, an additional ConstantE is created representing the new content.

c = UUID()

c -- EntityE

c -- IdentityES -- "filename 2020-10-14T19:42:13.443"

c -- ConstantE

c -- ContentTypeES -- "Content-Type"

c -- ContentEB -- <10110...>

c -- ShaES -- "69CE10436A9247A38A07B07BDC5A02B4CAAC3CF1"

a -- ContentEE -- c

Now we have two instances of the file stored in Pii, both related to the same file.

Schema

Column types in Pii are the usual suspects: String, Timestamp, Binary, Integer, Real and Entity.

- String S is UTF-8 encoded text. (sqlite3 Text)

- Timestamp T is ISO8601 date and time. (sqlite3 Text)

- Binary B is a sequence of octets. (sqlite3 Blob)

- Integer I is an integer number. (sqlite3 Integer)

- Real R is a real number. (sqlite3 Real)

- Entity E is a UUID. (sqlite3 Text)

All relations - both unary and binary - have the column left L. Binary relations also have the column right R. L and R contain entity UUIDs or values from the value types. The types of the columns are shown with letters in the name of the relation. Relations also have columns for creation time T and association A.

create table ConstantE (l text, t text, a text);

create table ContentEB (l text, r blob, t text, a text);

Rows in relations are never removed or changed, only appended. The A column indicates if the relation should be realized (True) or if it should be broken up (False). This enables us to track the state of the relation over time, undo changes and construct views with cardinality n:n, 1:n, n:1 and 1:1 - all present at the same time for application queries. The schema can be seen as an extension of the 7th normal form.

For every relation, four views will be created that represent the cardinalities. For the relation ContentEE the views ContentEEcnn, ContentEEcn1, ContentEEc1n and ContentEEc11 will be available. These views only contain currently realized associations and therefore the column A is not needed.
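To illustrate the append-only idea, here is a sketch in C of how the current state of one association could be derived from the rows. It assumes that the most recent row for a given (L, R) pair decides whether the association is realized. That rule and all names in the sketch are assumptions made for the illustration, and this is not how Pii itself is implemented.

```c
#include <string.h>

typedef struct {
    const char* l;  /* left entity (UUID as text) */
    const char* r;  /* right entity or value */
    const char* t;  /* ISO 8601 creation time */
    int a;          /* 1 = realized, 0 = broken up */
} relation_row_t;

/* Returns 1 if the newest row for the pair (l, r) has a == 1. */
int relation_is_realized(const relation_row_t* rows, int count,
                         const char* l, const char* r)
{
    const relation_row_t* newest = 0;
    for (int i = 0; i < count; i++) {
        if (strcmp(rows[i].l, l) == 0 && strcmp(rows[i].r, r) == 0) {
            /* ISO 8601 timestamps of equal format sort as plain strings.
               On a tie the later appended row wins. */
            if (newest == 0 || strcmp(rows[i].t, newest->t) >= 0) {
                newest = &rows[i];
            }
        }
    }
    return newest != 0 && newest->a;
}
```

Since no row is ever changed, the same function can answer what the state was at an earlier time if it only looks at rows up to that timestamp.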

create view MutableEcn as select l, t ...

create view ContentEEcnn as select l, r, t ...

create view ContentEEcn1 as select l, r, t ...

create view ContentEEc1n as select l, r, t ...

create view ContentEEc11 as select l, r, t ...

Information Model

The growth of the schema is open ended. An entity starts out as being only a UUID which is not even stored in the database. Roles are then added to the entity. An entity can take on just one or all roles in the system at once. New roles can be added at any time. It would be tedious if the presentation layer had to search through all relations to find a particular entity. We need to add information that the presentation layer can use when it wants to display an entity and its relations. We want to specify if an entity role (unary relation) participates on the left or the right side of a binary relation. We also want to know the roles a specific entity has. The relations LeftSS, RightSS and RoleES do this for us.

a -- RoleES -- "EntityE"

a -- RoleES -- "MutableE"

a -- RoleES -- "FileE"

b -- RoleES -- "EntityE"

b -- RoleES -- "ConstantE"

c -- RoleES -- "EntityE"

c -- RoleES -- "ConstantE"

"FileE" -- LeftSS -- "PathEScn1"

"ConstantE" -- LeftSS -- "ContentTypeEScn1"

"ConstantE" -- LeftSS -- "ContentEBcn1"

"ConstantE" -- LeftSS -- "ShaEScn1"

"MutableE" -- LeftSS -- "ContentEEc1n"

"ContentEEc1n" -- RightSS -- "ConstantE"

Note that the value types S, B, T, I, R are not added to RightSS. They are derived from the relation name when needed. It is only the entity roles that we need to model in this way.

From now on whenever I assign a role to an entity, e.g. x -- FileE it also means that there will be an x -- RoleES -- "FileE" row added to RoleES in addition to the row in relation FileE. And instead of specifying the LeftSS and RightSS relations I will use the following shorthands to define the information model.

FileE -- PathEScn1

ConstantE -- ContentTypeEScn1

ConstantE -- ContentEBcn1

ConstantE -- ShaEScn1

MutableE -- ContentEEc1n -- ConstantE

All entities should have the role EntityE with the relation IdentityES. This is the human readable name of the entity.

EntityE -- IdentityEScn1

All relations are always optional for an entity to participate in. Any entity that hasn't specified an identity will just not have a value for that relation.

The shape and display color of an entity can be changed by its roles. The last role assigned to the entity has precedence.

"MutableE" -- ShapeSS -- "box"

"ConstantE" -- ColorSS -- "white"

Embedded Records

Now let's assume the file is a requirements specification that contains requirements. We use a function that can parse the document and extract the requirements. Add a second line to tracker.py.

rs = trackRequirements(a)

The requirements that are found will be added to Pii.

d = UUID()

d -- EntityE

d -- IdentityES -- "Requirement 17.3 Make it fast! v6.1"

d -- MutableE

d -- EmbeddedE

a -- ContainerE

a -- MemberEE -- d

e = UUID()

e -- ConstantE

e -- ContentTypeES -- "text/plain"

e -- ContentEB -- <11010...>

e -- ShaES -- "2CAF6FBAE0B00796E2B59656660941BC331FDEED"

e -- EmbeddedE

c -- ContainerE

c -- MemberEE -- e

d -- ContentEE -- e

# Information Model

ContainerE -- MemberEEc1n -- EmbeddedE

Both the mutable and the constant requirement entities are assigned the role EmbeddedE. This is not strictly necessary and could be seen as duplication of information. This is not a problem as Pii is not the original source of the information, it just models information already available elsewhere and it will never be updated manually. Therefore it is harmless to add redundant relations that will simplify navigation for the user.

We could also use EmbeddedE for FileEs that are stored in ContainerE zip FileEs.

Note that we have not assigned the role RequirementE to the MutableE. This is a role we want to save for a higher level entity.

Versions

Different versions of an artifact form a collection that we want to keep together. The low level change tracking that we get with ConstantE and MutableE is not suitable for this task. We may for example want to have several versions available in the file system at the same time and we may want to maintain parallel branches. We will introduce two entity roles called VersionE and ArtifactE and extend the MutableE d from above representing the requirement with VersionE. The artifact will also have the role RequirementE.

d -- VersionE

f = UUID()

f -- EntityE

f -- IdentityES -- "Requirement 17.3 Make it fast!"

f -- ArtifactE

f -- RequirementE

f -- VersionEE -- d

# Information Model

ArtifactE -- VersionEEc1n -- VersionE

Branches

A branch is an entity that is both an ArtifactE and a VersionE and it is used to group versions together for easier navigation. A dot in a version number indicates that we have a branch. We will rebuild the structure above by inserting a branch between the ArtifactE f and the VersionE d.

g = UUID()

g -- EntityE

g -- IdentityES -- "Requirement 17.3 Make it fast! v6.x"

g -- ArtifactE

g -- VersionE

f -- VersionEE -- g

g -- VersionEE -- d

The VersionE can be associated with both the top ArtifactE and the branch ArtifactE. This is probably a correct representation of how the model has evolved over time. First we have the branchless versions 1, 2, 3, 4, 5, 6, 7 directly associated with the top ArtifactE f. Then the need to branch version 6 arises and we create the branch 6.x where we put both version 6 (aka 6.0) and 6.1. Nothing prevents us from also associating ArtifactE f with 6.1 directly if we want to.

Designing and Building Things using Parts, Materials, Tools and Instructions

A product design is modelled as an ArtifactE. To be able to build items of this design we need to know which parts and materials to use, what tools we need and what instructions to follow. These four categories are in turn modelled as ArtifactEs and can be broken down in the same way.

ComposedE -- BoMPartEEc1n -- BoMPartE

BoMPartE -- CountEIcn1

BoMPartE -- PartEEcn1 -- PartE

ComposedE -- BoMMaterialEEc1n -- BoMMaterialE

BoMMaterialE -- AmountERcn1

BoMMaterialE -- UnitEScn1

BoMMaterialE -- MaterialEEcn1 -- MaterialE

BoM stands for Bill of Materials which is a list of the things and how much of each we need to build something. The ArtifactE we are designing will come in different versions over time. Each VersionE entity of the ArtifactE will also have the relations above, but they will associate with other VersionEs of the ArtifactEs that are used as parts in each particular case.

Software and other information ArtifactEs seldom use more than one of each of the components that they are built from (licensing conditions could create exceptions). This motivates a simpler kind of relation in these cases.

AggregateE -- ComponentEEcnn -- ComponentE

We may want to use this relation for the ArtifactEs of physical products as well and only specify the BoM for the VersionEs. The reason is that the amount and exactly what is needed to build something can vary over time, but the ArtifactE represents all VersionEs of itself and should therefore not be too specific.

Finally, here are the relations for tools and instructions.

ManufactureE -- ToolEEcnn -- ToolE

WorkE -- InstructionEEcnn -- InstructionE

ElectricalE -- SchemaEEcnn -- SchemaE

MechanicalE -- DrawingEEcnn -- DrawingE

CompiledE -- SourceCodeEEcnn -- SourceCodeE

Manufacture and work should be read as nouns, e.g. a work of art, the manufacture was made of wood.

As we have seen above, digital records that are the result of the build process can be stored directly in the VersionE entity when it is assigned the role MutableE. Physical products are associated with the VersionE through the ItemEE or ProduceEE relations. The VersionE is assigned the BlueprintE role or the RecipeE role depending on what kind of product is produced.

BlueprintE -- ItemEEc1n -- ItemE

RecipeE -- ProduceEEc1n -- ProduceE

ProduceE -- UnitEScn1

ProduceE -- AmountERcn1

If you want to organise your output in batches then let the batch entity have both the BlueprintE+ItemE roles or the RecipeE+ProduceE roles just like we did with branches.

Batteries not Included

Products that don't come with all necessary parts to function as intended are called integrated. This is typical for software that dynamically loads modules at runtime.

IntegratedE -- ModuleEEcnn -- ModuleE

Specifications

Specifications like the requirement we modelled earlier are something that dictates what other artifacts must be or how they must behave.

SpecificationE -- ImplementationEEcnn -- ImplementationE

Correctly tracked, this relation can be used to find out which changes made to a specification still remain to be implemented, a key feature for full traceability. If an ArtifactE is an ImplementationE of a SpecificationE, then all VersionEs of the SpecificationE should have at least one ImplementationE among the VersionEs of the ArtifactE. If some are missing then we know we have things left to implement.

It is also valuable to track which TestEs aim to refute the ImplementationE with input from the SpecificationE.

SpecificationE -- TestEEcnn -- TestE

Note that a test can be an implementation of a test specification at the same time it is a refutation test for a product specification.

Tests

Tests can be organized in a hierarchical structure using the aggregate/component roles and relations. The test result associates a version of the test target with a specific version of the test that was performed.

TestResultE -- TestTargetEEcn1 -- TestTargetE

TestResultE -- TestEEcn1 -- TestE

Guides

Guides tell you how to use things. They can be user guides, service guides, and so on.

ApplianceE -- GuideEEcnn -- GuideE

Retired Entities

When an entity no longer is actively participating in the product structure it will take on the role RetiredE.

x -- RetiredE

I Want Pii

Download the code from https://github.com/TheOtherMarcus/Pii.
